This case study covers the search for purchase order (PO) anonymization software for a defense company.

Client’s business problem

Following the dematerialization law, the group invested in a cloud solution (a supplier portal) for managing supplier invoice data. Because of the group’s sensitive activities and a recently published protection law, we need to put in place a process or tool for anonymizing the data in the PO (Purchase Order) description lines before sending the PO to the supplier and uploading the PO data to the supplier portal.

Scope of the problem

The market considered is France, but the scope could be extended in the future, especially to Spain. The teams impacted are P2P and Finance O2C.

Objectives and KPI to improve

We (local stakeholders from the French entities) need to manage a list of sensitive keywords. This list will change frequently for business reasons (about once or twice per month).

We need a tool that screens the PO lines in several ERPs (such as SAP ECC6, Oracle EBS 11i or R12…) against this list before the PO is approved.

Each time a keyword from the list is found in the PO lines, it needs to be replaced by “********” or “xxxxxxx”…

Once the modification is done, the PO is sent for approval and then sent to the supplier or to another destination.
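The screening rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the behavior of any assessed tool: the keyword values, the function names `build_pattern` and `mask_line`, and the choice to match whole words case-insensitively are all hypothetical.

```python
import re

def build_pattern(keywords):
    """Compile one case-insensitive regex that matches any whole keyword."""
    # Deduplicate, drop blanks, and put longer keywords first so that a
    # multi-word keyword wins over a shorter keyword it contains.
    ordered = sorted({k.strip() for k in keywords if k.strip()},
                     key=len, reverse=True)
    if not ordered:
        return None
    alternation = "|".join(re.escape(k) for k in ordered)
    # The lookarounds require that no word character touches the match,
    # so "radar" is not matched inside "radars" (an assumed design choice).
    return re.compile(rf"(?<!\w)(?:{alternation})(?!\w)", re.IGNORECASE)

def mask_line(line, pattern, mask="********"):
    """Return the PO line with every keyword occurrence replaced by the mask."""
    if pattern is None:
        return line
    # A function replacement avoids any escape handling in the mask string.
    return pattern.sub(lambda match: mask, line)

# Hypothetical keyword list and PO description line.
keywords = ["radar", "guidance system"]
pattern = build_pattern(keywords)
masked = mask_line("Spare parts for Radar unit and guidance system", pattern)
print(masked)
```

Because the keyword list changes once or twice a month, the pattern would be rebuilt from the current list whenever the list is updated; whether plurals and spelling variants should also be masked is a business decision this sketch leaves open.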

Constraints to be taken into account

Tech ecosystem to integrate with:

-5 ERPs: Oracle E-Business Suite 11i or R12, SAP ECC6 (several instances)

Infrastructure: because the data is sensitive, the solution must be available on premises

Regulatory requirements: None

Timing: the data is expected to be cleansed before the end of 2023; the work can be phased across 2023 and Q1 2024

Solutions were assessed on three fronts:

-Suitability for the use case

-Vendor reliability and ability to scale

-ROI

List of relevant vendors:

Talend, Alteryx, Dataiku, KNIME, Automation Anywhere, UiPath, TIMi, Oracle, SS&C Blue Prism, Incorta, Denodo

In just 3 weeks, Vektiq’s AI-powered platform pinpointed the ideal solution for a finance and procurement tribe leader.

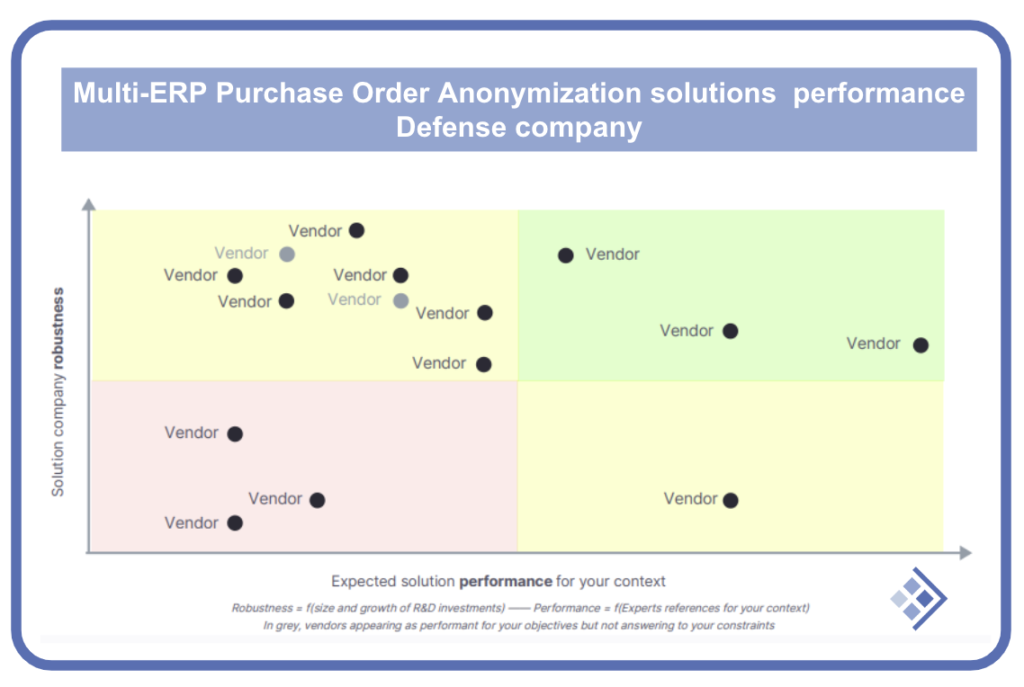

Anonymized Performance Matrix

Vendors’ answers and comments

Alteryx answer

Alteryx empowers analysts to seamlessly access your complete ERP landscape directly within the Alteryx platform. This allows them to efficiently extract, blend, restructure, analyze, and export data, significantly improving their workflow speed.

One of Alteryx’s standout features is its ability to liberate data from PDFs and images. Analysts can extract data embedded in system-generated PDFs using the PDF to Text feature. Furthermore, they can harness the impressive Optical Character Recognition (OCR) capabilities of Google Tesseract through the Image to Text tool, making it possible to extract content from various documents, such as invoices, business cards, forms, and more.

Alteryx also enables the development of image classification models and the application of these models to new images using Image Recognition. Additionally, it provides automated entity name extraction from text with Named Entity Recognition (NER).

For users dealing with SAP and Oracle systems, Alteryx offers a range of connectors that facilitate metadata extraction from SAP or Oracle, seamlessly integrating this information into the Alteryx environment. These connectors are compatible with various SAP components like SAP BW, SAP Business Suite, SAP ECC, SAP CRM, SCM, SAP S/4HANA On-Premise edition, and Oracle Databases.

Alteryx Designer stands as the industry leader in data preparation, blending, and analytics, offering a user-friendly interface with drag-and-drop capabilities, reducing the reliance on coding and expediting each stage of the analytics process. The public price for this powerful solution is €5,195 per user.

With the Alteryx Server at the core of your analytics ecosystem, both data scientists and citizen users can focus on uncovering answers to previously unknown questions. Simultaneously, IT professionals can be confident in the platform’s visibility, control, and auditability, making it an ideal choice for managing self-service analytics at scale. Pricing for Alteryx is based on CPU sizing, determined by the specific architecture deployed.

What others say on Alteryx

Alteryx is not just a data preparation solution; it’s a robust self-service platform with a wide array of functionalities that can effectively address various use cases. This includes capabilities to read and write Excel files, as well as read and write Oracle databases, among others.

However, it’s important to note that Alteryx falls short of GDPR compliance. Specifically, when utilizing Alteryx, the typical workflow involves designing a new data transformation on your local machine, followed by “publishing” it for automatic execution on your main server. The issue with Alteryx’s publication mechanism is that it first transfers your data transformation, along with any sensitive data, such as Excel and CSV files, to their cloud servers in the USA before replicating it to your on-premises server. This data transfer approach can pose potential GDPR compliance concerns.

Furthermore, Alteryx lacks a connector for SAP ERP, offering only a connector for Sybase SAP, which is distinct from SAP ERP systems. In contrast, TIMi stands out as one of the very few tools with a dedicated SAP ERP connector.

Additionally, Alteryx’s connection to SharePoint has reliability issues, commonly failing after approximately two weeks. Users often find themselves needing to manually re-authenticate to restore functionality. These interruptions in SharePoint connectivity can lead to operational disruptions.

Talend answer

Integrated Group ETL and Data Quality Solution.

Anonymization capabilities are already part of the existing license.

Access to the defense company Group’s expert Talend team.

E-learning platform accessible to all the defense company users, featuring modules on anonymization.

Existing use cases with SAP (the defense company).

Seamless connectivity to both SAP and Oracle systems.

Diverse anonymization options available.

Data Quality tools for data cleansing, merging, and enhancement.

Established use cases in financial and procurement data optimization, including supplier data cleaning, order confirmations, and invoice validation.

What others say on Talend

While Talend offers a comprehensive solution, it may be perceived as slow in certain scenarios, although this may not be critical in this specific use case.

Talend currently lacks direct connectors to SAP ERP and SharePoint, necessitating the use of third-party providers for integration.

Talend is recognized as an enterprise data integration and management platform, consistently earning high rankings in analyst reports from Gartner and Forrester. It boasts a mature product set suitable for both on-premise and cloud deployments.

The platform excels in data integration, including integration with big data and Hadoop, data quality, data management (including real-time streaming), and data governance. These capabilities are primarily driven by IT professionals.

Additionally, Talend offers a low-cost or even free self-service data preparation product called Talend Open Studio, providing flexibility to users with varying needs.

Dataiku answer

The task at hand involves anonymizing a specified list of words within documents originally stored in various ERPs. To accomplish this task using Dataiku, you can follow these steps:

Establish Data Connections: First, connect your Dataiku project to the different ERPs where the Purchase Orders are stored. Dataiku offers built-in connectors for a wide range of ERP systems, such as SAP (using the OData protocol) and Oracle databases (with the Oracle JDBC connector). If a connector is not native to Dataiku or you need to extract data from specific file types like PDF documents, you can create a custom connection as a Python plugin. This empowers non-coders to seamlessly access the data source.

Connect to Anonymization Source: Additionally, connect Dataiku to the file or database containing the list of words you wish to anonymize.

Build a Data Flow: Create a data flow that includes:

-Extracting text from the input documents.

-Replacing the words from the list with anonymized values, such as stars (e.g., “*”). Dataiku provides string manipulation capabilities and supports Python code for text replacement. You can also use external libraries like PyPDF2 to reconstruct PDF documents with the modified text content. Dataiku’s Python code capabilities allow you to integrate these libraries and create a new PDF with the desired changes. This code can be encapsulated into a plugin for easy reuse, enabling the anonymization of new documents with the same or different lists of words to anonymize.

Create an Automated Scenario: Set up a scenario to automate the update of the list of words to be anonymized. Dataiku can integrate the new list of words based on your chosen trigger.

Develop a User-Friendly Dataiku Application: To make the anonymization process accessible to non-data experts, build a Dataiku application. This application will enable users to anonymize their documents with a simple interface and provide a preview of the anonymized results.
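The core of the data flow described in these steps (load the word list, replace matches in the PO text, preview the result) can be sketched in plain Python. This is a minimal sketch using only the standard library and deliberately no Dataiku API calls; the column names `po_number` and `description`, the sample data, and the helper names `load_keywords` and `anonymize_rows` are assumptions made for illustration.

```python
import csv
import io
import re

def load_keywords(csv_text):
    """Read one keyword per row from the first column, skipping blank rows."""
    rows = csv.reader(io.StringIO(csv_text))
    return [row[0].strip() for row in rows if row and row[0].strip()]

def anonymize_rows(rows, keywords, column="description", mask="*"):
    """Return copies of the rows with listed keywords starred out in one column."""
    if not keywords:
        return [dict(row) for row in rows]
    alternation = "|".join(
        re.escape(k) for k in sorted(keywords, key=len, reverse=True)
    )
    pattern = re.compile(rf"(?<!\w)(?:{alternation})(?!\w)", re.IGNORECASE)
    result = []
    for row in rows:
        clean = dict(row)  # copy, so the original row stays available for preview
        # One mask character per original character (an assumed convention).
        clean[column] = pattern.sub(
            lambda match: mask * len(match.group(0)), row[column]
        )
        result.append(clean)
    return result

# Hypothetical inputs standing in for the keyword source and the ERP extract.
keyword_csv = "sonar\nProject Falcon\n"
po_csv = (
    "po_number,description\n"
    "4500001,Cables for Project Falcon\n"
    "4500002,Office chairs\n"
)
po_rows = list(csv.DictReader(io.StringIO(po_csv)))
result = anonymize_rows(po_rows, load_keywords(keyword_csv))

# Side-by-side preview, similar in spirit to the preview mentioned above.
for before, after in zip(po_rows, result):
    print(before["description"], "->", after["description"])
```

In a real project the two inputs would come from the ERP and keyword-list connections rather than inline strings, and the function body could live in a Python code step or a reusable plugin.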

It’s worth noting that Dataiku is recognized for its collaborative capabilities in data science projects. Some users find it to be a more cost-effective solution compared to Alteryx, making it a popular choice in various data science and analytics scenarios.

What others say on Dataiku

Dataiku is known as a collaborative solution for data science projects, and customers sometimes consider it less expensive than Alteryx.

Blue Prism answer

We propose that the ideal solution for your use case involves a combination of SS&C Blue Prism’s on-premise Robotic Process Automation (RPA) and SS&C Blue Prism’s on-premise Intelligent Document Processing (IDP) product, known as Decipher.

Here’s a detailed breakdown of the solution:

Document Collation and Ingestion:

SS&C Blue Prism’s RPA will be utilized to gather Purchase Order (PO) documents from various sources, including email, folder locations, or web form uploads.

These documents will be ingested into Decipher IDP.

Document Quality Check and OCR:

Decipher IDP, with its multi-language capabilities, will perform several tasks:

Verify document formatting (e.g., orientation, despeckling, deskewing) to ensure accuracy.

Perform Optical Character Recognition (OCR) on the documents to extract PO information and PO line details.

Conversion to Electronic Form:

Decipher will convert the extracted PO lines into an electronic format.

Integration with SS&C RPA:

The electronic PO data will be exported back to SS&C RPA for further processing.

PO Line Anonymization:

The RPA tool will assess the extracted PO lines against predefined formats to determine which lines require anonymization.

Structured Data Output:

The solution will output all relevant information in a structured electronic format, ready for automatic posting to systems such as SAP ECC or Oracle. Alternatively, it can be routed to an authorization user for approval before posting.

Pricing for this solution is based on the number of concurrent RPA sessions (commonly referred to as Digital Workers – DW) needed to handle your expected volumes. A fair-use maximum for Decipher IDP is set at 4,000 sheets of A4 per concurrent session (DW) per month. Based on your described volume of 100,000 sheets per month, you would require 25 SS&C Blue Prism licenses (DW).
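The license count quoted above follows from dividing the monthly volume by the fair-use limit and rounding up. A small sketch of that arithmetic, where the function name `licenses_needed` is hypothetical and the 4,000-sheet limit is the figure stated in the vendor's answer:

```python
def licenses_needed(sheets_per_month, fair_use_per_license=4000):
    """Number of concurrent sessions (DW) needed, rounded up to a whole license."""
    # Integer ceiling division: any partial block of sheets needs a full license.
    return (sheets_per_month + fair_use_per_license - 1) // fair_use_per_license

required = licenses_needed(100_000)
print(required)  # 100,000 / 4,000 = 25
```

The rounding matters as soon as the volume is not an exact multiple of the limit: 100,001 sheets per month would already require 26 licenses under the same assumption.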

It’s important to note that SS&C Blue Prism offers an alternative solution for the IDP component known as Document Automation, which deals with handwriting recognition. However, this is a SaaS-based solution and is not expected to be within the scope of your requirements.

What others say on Oracle

OCA provides good integration with EBS, but if the client also needs PO anonymization from SAP ECC, OCA would not be compatible. OCA also requires significant data engineering overhead.

What others say on Databricks

No on-premises option. Databricks provides great technology for data engineering and ML, but still requires a lot of ETL, batch processing of new data, and IT resources.

Based on our knowledge, this solution does not have prebuilt data applications for Oracle and SAP, so PO records cannot be extracted automatically from these ERPs. It will require data engineering overhead to set up the pipeline and to run batch processing each time the ERP database is updated with new records.

What others say on UiPath

Also requires combined RPA and OCR

What others say on Snowflake

No on-premises option. Snowflake is a good data warehouse, but it does not change the usual data architecture, in which ETL processing is needed between each stage of the data pipeline. Consumption-based pricing can be costly.

Based on our knowledge, this solution does not have prebuilt data applications for Oracle and SAP, so PO records cannot be extracted automatically from these ERPs. It will require data engineering overhead to set up the pipeline and to run batch processing each time the ERP database is updated with new records.

What others say on Automation Anywhere

Combined RPA and OCR tool

What others say on ABBYY

Class-leading OCR capabilities

Will still need RPA to post into SAP/Oracle

What others say on Kofax

Combined RPA and OCR tool

Incorta answer

Incorta has prebuilt data applications for Oracle EBS and SAP ECC, which can automatically extract PO records directly from the source without the need for ETL or reshaping the data. Incorta can implement a rule on a list of keywords found in the PO name column to anonymize based on requirements.

Incorta is the only smart lakehouse on the market which stores data in an updatable parquet delta format, which eliminates the need for ETL by mapping data directly to the source. Incorta has robust native connections to ERPs and business systems such as Oracle, SAP, Salesforce, Workday, etc., with prebuilt data models and insights that accelerate time to value and insight across many verticals.

The pricing model for the on-prem and cloud solutions is an annual subscription that depends on: computing capacity (Small, Medium, Large, XLarge, 2XLarge), chosen according to the data size used for the use case (no capacity limit on storage); and capabilities tier (Standard or Enterprise). The pricing model is transparent and predictable, not consumption-based.

Denodo answer

The Denodo Platform, powered by Data Virtualization, is the leading Data Integration, Management and Delivery Platform using a Logical Approach. Denodo supports on-premise, cloud and hybrid deployment models. In all these scenarios, the Denodo installation can be scaled for high-concurrency requirements. The Denodo Platform decouples the heterogeneities of the underlying data sources from the upper-layer client applications, providing seamless access to all types of data to serve any informational or operational business need. The Denodo Platform connects to 150 data sources, in particular SAP ECC 6.0 and Oracle E-Business Suite.

Data virtualization provides consumer applications with a single logical point for accessing data from sources, avoiding complex, difficult-to-maintain point-to-point connections and decoupling data consumers from data sources. In the data virtualization layer it is possible to generate multiple logical business views over the same data to suit the specific requirements of every business user and application, with their specific naming conventions and delivering the data in the format most suitable to each of them.

Global Security Policies is a Denodo feature that allows you to define security restrictions that apply to all or some users over all or several views that meet certain conditions. For instance, you can define a global policy so that only the users with a certain role can query the views that have the tag “sensitive”. Denodo Design Studio is a web interface that lets users register SAP tables (and source structures in general), apply a tag to sensitive fields and create a policy rule based on this tag. The user maintains a file or table that specifies the list of sensitive words to hide.

The result can be displayed in any third-party tool compatible with ODBC/JDBC drivers, such as Excel or a reporting tool, or exposed through an API.

TIMi answer

We’ll deploy our solution, TIMi, on one of your machines (you requested an “on premises” solution). We need a PC running Windows Server 2019 or above, with 16 GB of RAM. Installing TIMi takes 5 minutes.

The technical details of the solution are described here: https://timi.eu/private/the defense company.pdf

Pricing model:

The yearly license fee to install TIMi on a server is usually around XXK€. Since we are solving a very easy, small and limited use case, we can reduce this fee to XXK€.

On top of the license fee, we need to create a few simple data transformations for the defense company (that’s the coding part). Since this is a very simple problem to solve, we expect 15 workdays for this part (at a price of XK€ per day): 5 days will be spent with the stakeholders to understand their exact needs, 5 days will be spent designing the solution, and 5 days are kept as a reserve and for administrative tasks. Alternatively, we can train one of your engineers so that they will be able to improve, upgrade and maintain the proposed solution.

The total fee is thus XXK€ for the first year (because of the small amount of coding work) and XXK€ for each subsequent year.